These notes were taken while studying material by 炼丹兄 of AI研习社.

1.1 .grad

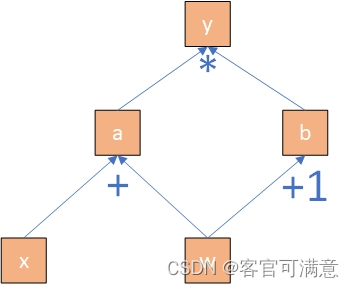

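Example: for $y = (x + w)(w + 1)$, compute the gradient of $y$ with respect to $w$:

$$\frac{\partial y}{\partial w} = \frac{\partial y}{\partial a}\frac{\partial a}{\partial w} + \frac{\partial y}{\partial b}\frac{\partial b}{\partial w} = b + a = (w + 1) + (x + w) = 5$$

(In the calculation above, $a = x + w$, $b = w + 1$, $w = 1$, and $x = 2$.)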
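As a sanity check that does not rely on autograd, the derivative at this point can be approximated with a central finite difference. This is only a minimal sketch in plain Python. It assumes the example function is y = (x + w)(w + 1) evaluated at w = 1, x = 2. The helper names `f` and `central_diff` and the step size `h` are illustrative choices, not part of the original notes.

```python
def f(w: float, x: float) -> float:
    """Example function y = (x + w) * (w + 1)."""
    return (x + w) * (w + 1.0)


def central_diff(w: float, x: float, h: float = 1e-5) -> float:
    """Approximate dy/dw with a symmetric (central) finite difference."""
    return (f(w + h, x) - f(w - h, x)) / (2.0 * h)


approx = central_diff(1.0, 2.0)
print(approx)  # very close to 5, the chain-rule value b + a = 2 + 3
```

Because y is quadratic in w, the central difference agrees with the analytic value up to floating-point rounding, which makes it a convenient cross-check for the autograd result.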

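import torch

w = torch.tensor([1.], requires_grad=True)  # leaf tensor, w = 1

x = torch.tensor([2.], requires_grad=True)  # leaf tensor, x = 2

a = w + x  # a = 3

b = w + 1  # b = 2

y = a * b  # y = 6

y.backward()  # run backpropagation and fill .grad on the leaf tensors

print(w.grad)  # tensor([5.])

1.2 .is_leaf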
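Before the worked example, here is a quick orientation on what `.is_leaf` reports. It is a minimal sketch with made-up tensor names (`c`, `d`, `e`, `e_detached`). It relies on the PyTorch convention that any tensor with `requires_grad=False` counts as a leaf, while a tensor produced by an operation on a tracked tensor does not.

```python
import torch

w = torch.tensor([1.], requires_grad=True)  # created directly by the user: leaf
c = torch.tensor([3.])                      # requires_grad=False: leaf by convention
d = c * 2                                   # no tracked input involved: still a leaf
e = w * 2                                   # result of an op on a tracked tensor: not a leaf
e_detached = e.detach()                     # detached from the graph: a leaf again

print(w.is_leaf, c.is_leaf, d.is_leaf, e.is_leaf, e_detached.is_leaf)
# True True True False True
```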

Example: for $y = (x + w)(w + 1)$, compute the gradient of $y$ with respect to $w$, with $w = 1$ and $x = 2$:

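import torch

w = torch.tensor([1.], requires_grad=True)  # leaf tensor

x = torch.tensor([2.], requires_grad=True)  # leaf tensor

a = w + x  # non-leaf: produced by an operation

b = w + 1  # non-leaf

y = a * b  # non-leaf

y.backward()

print(w.is_leaf, x.is_leaf, a.is_leaf, b.is_leaf, y.is_leaf)  # True True False False False

print(w.grad, x.grad, a.grad, b.grad, y.grad)  # tensor([5.]) tensor([2.]) None None None (PyTorch warns when .grad of a non-leaf is read)

1.3 .retain_grad()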
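As a quick orientation, the sketch below shows that `retain_grad()` is an opt-in that has to be requested on the non-leaf tensor before `backward()` runs. It assumes a reasonably recent PyTorch release that exposes the read-only `Tensor.retains_grad` flag. The variable name `before` is just an illustrative helper.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)

a = w + x
b = w + 1

before = a.retains_grad  # False: a is a non-leaf that has not opted in yet
a.retain_grad()          # request that a.grad be kept; call this before backward()
print(before, a.retains_grad)  # False True

y = a * b
y.backward()

print(a.grad)  # tensor([2.]), i.e. dy/da = b
print(w.grad)  # tensor([5.]), unchanged by the retain_grad() call
```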

Example: for $y = (x + w)(w + 1)$, compute the gradient of $y$ with respect to $w$, with $w = 1$ and $x = 2$:

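import torch

w = torch.tensor([1.], requires_grad=True)

x = torch.tensor([2.], requires_grad=True)

a = w + x

a.retain_grad()  # keep the gradient of the non-leaf tensor a after backward()

b = w + 1

y = a * b

y.backward()

print(w.is_leaf, x.is_leaf, a.is_leaf, b.is_leaf, y.is_leaf)  # True True False False False

print(w.grad, x.grad, a.grad, b.grad, y.grad)  # tensor([5.]) tensor([2.]) tensor([2.]) None None

1.4 .grad_fn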

Example: for $y = (x + w)(w + 1)$, compute the gradient of $y$ with respect to $w$, with $w = 1$ and $x = 2$:

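import torch

w = torch.tensor([1.], requires_grad=True)

x = torch.tensor([2.], requires_grad=True)

a = w + x

a.retain_grad()

b = w + 1

y = a * b

y.backward()

print(y.grad_fn)  # <MulBackward0 object at 0x...>: y was produced by a multiplication

print(a.grad_fn)  # <AddBackward0 object at 0x...>: a was produced by an addition

print(w.grad_fn)  # None: w is a leaf created by the user, not by an operation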
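Going one step beyond printing a single `grad_fn`, each backward node also links to the nodes of its inputs through `next_functions`, so the whole backward graph can be walked from `y.grad_fn`. The sketch below is one possible way to do that. The helper name `walk` is hypothetical, and the `getattr` fallback is a defensive assumption in case a node does not expose `next_functions`.

```python
import torch

w = torch.tensor([1.], requires_grad=True)
x = torch.tensor([2.], requires_grad=True)
a = w + x
b = w + 1
y = a * b


def walk(fn, depth=0):
    """Return (depth, node type name) pairs for the backward graph rooted at fn."""
    if fn is None:  # an input that needs no gradient, such as the constant 1
        return []
    nodes = [(depth, type(fn).__name__)]
    for next_fn, _ in getattr(fn, "next_functions", ()):
        nodes.extend(walk(next_fn, depth + 1))
    return nodes


graph = walk(y.grad_fn)
for depth, name in graph:
    print("  " * depth + name)
```

The root is the `MulBackward0` node for `y`, its two children are the `AddBackward0` nodes for `a` and `b`, and the leaf tensors `w` and `x` show up as `AccumulateGrad` nodes, which is where `.grad` gets written.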
