年关将近,工作上该完成的都差不多了,上午闲着就接触学习了一下爬虫,抽空还把正则表达式复习了,Java的Regex和JS上还是有区别的,JS上的"\w"Java得写成"\\w",因为Java会对字符串中的"\"做转义,还有JS中"\S\s"的写法(指任意多的任意字符),Java可以写成".*"

博主刚接触爬虫,参考了许多博客和问答贴,先写个爬虫的Overview让朋友们对其有些印象,之后我们再展示代码.

网络爬虫的基本原理:

网络爬虫的基本工作流程如下:

1.首先选取一部分精心挑选的种子URL;

2.将这些URL放入待抓取URL队列;

3.从待抓取URL队列中取出待抓取在URL,解析DNS,并且得到主机的ip,并将URL对应的网页下载下来,存储进已下载网页库中。此外,将这些URL放进已抓取URL队列。

4.分析已抓取URL队列中的URL,分析其中的其他URL,并且将URL放入待抓取URL队列,从而进入下一个循环。

网络爬虫的抓取策略有:深度优先遍历,广度优先遍历(是不是想到了图的深度和广度优先遍历?),Partial PageRank,OPIC策略,大站优先等;

博主采用的是实现起来比较简单的广度优先遍历,即获取一个页面中所有的URL后,将之塞进URL队列,于是循环条件就是该队列非空.

网络爬虫(HelloWorld版)

入口类:

package com.example.spiderman.page;

/*** @author YHW* @ClassName: MyCrawler* @Description:*/ import com.example.spiderman.link.LinkFilter; import com.example.spiderman.link.Links; import com.example.spiderman.page.Page; import com.example.spiderman.page.PageParserTool; import com.example.spiderman.page.RequestAndResponseTool; import com.example.spiderman.utils.FileTool; import com.example.spiderman.utils.RegexRule; import org.jsoup.select.Elements;import java.util.Set;public class MyCrawler {/*** 使用种子初始化 URL 队列** @param seeds 种子 URL* @return*/private void initCrawlerWithSeeds(String[] seeds) {for (int i = 0; i < seeds.length; i++){Links.addUnvisitedUrlQueue(seeds[i]);}}/*** 抓取过程** @param seeds* @return*/public void crawling(String[] seeds) {//初始化 URL 队列 initCrawlerWithSeeds(seeds);//定义过滤器,提取以 变量url 开头的链接LinkFilter filter = new LinkFilter() {@Overridepublic boolean accept(String url) {if (url.startsWith("https://www.cnblogs.com/Joey44/"))return true;elsereturn false;}};//循环条件:待抓取的链接不空且抓取的网页不多于 1000while (!Links.unVisitedUrlQueueIsEmpty() && Links.getVisitedUrlNum() <= 1000) {//先从待访问的序列中取出第一个;String visitUrl = (String) Links.removeHeadOfUnVisitedUrlQueue();if (visitUrl == null){continue;}//根据URL得到page;Page page = RequestAndResponseTool.sendRequstAndGetResponse(visitUrl);//对page进行处理: 访问DOM的某个标签Elements es = PageParserTool.select(page,"a");if(!es.isEmpty()){System.out.println("下面将打印所有a标签: ");System.out.println(es);}//将保存文件 FileTool.saveToLocal(page);//将已经访问过的链接放入已访问的链接中; Links.addVisitedUrlSet(visitUrl);//得到超链接Set<String> links = PageParserTool.getLinks(page,"a");for (String link : links) {

//遍历链接集合,并用正则过滤出需要的URL,再存入URL队列中去,博主这里筛出了所有博主博客相关的URLRegexRule regexRule = new RegexRule();regexRule.addPositive("http.*/Joey44/.*html.*");

if(regexRule.satisfy(link)){Links.addUnvisitedUrlQueue(link);System.out.println("新增爬取路径: " + link);}}}}//main 方法入口public static void main(String[] args) {MyCrawler crawler = new MyCrawler();crawler.crawling(new String[]{"https://www.cnblogs.com/Joey44/"});} }

网络编程自然少不了Http访问,直接用apache的httpclient包就行:

package com.example.spiderman.page;/*** @author YHW* @ClassName: RequestAndResponseTool* @Description:*/import org.apache.commons.httpclient.DefaultHttpMethodRetryHandler; import org.apache.commons.httpclient.HttpClient; import org.apache.commons.httpclient.HttpException; import org.apache.commons.httpclient.HttpStatus; import org.apache.commons.httpclient.methods.GetMethod; import org.apache.commons.httpclient.params.HttpMethodParams;import java.io.IOException;public class RequestAndResponseTool {public static Page sendRequstAndGetResponse(String url) {Page page = null;// 1.生成 HttpClinet 对象并设置参数HttpClient httpClient = new HttpClient();// 设置 HTTP 连接超时 5shttpClient.getHttpConnectionManager().getParams().setConnectionTimeout(5000);// 2.生成 GetMethod 对象并设置参数GetMethod getMethod = new GetMethod(url);// 设置 get 请求超时 5sgetMethod.getParams().setParameter(HttpMethodParams.SO_TIMEOUT, 5000);// 设置请求重试处理getMethod.getParams().setParameter(HttpMethodParams.RETRY_HANDLER, new DefaultHttpMethodRetryHandler());// 3.执行 HTTP GET 请求try {int statusCode = httpClient.executeMethod(getMethod);// 判断访问的状态码if (statusCode != HttpStatus.SC_OK) {System.err.println("Method failed: " + getMethod.getStatusLine());}// 4.处理 HTTP 响应内容byte[] responseBody = getMethod.getResponseBody();// 读取为字节 数组String contentType = getMethod.getResponseHeader("Content-Type").getValue(); // 得到当前返回类型page = new Page(responseBody,url,contentType); //封装成为页面} catch (HttpException e) {// 发生致命的异常,可能是协议不对或者返回的内容有问题System.out.println("Please check your provided http address!");e.printStackTrace();} catch (IOException e) {// 发生网络异常 e.printStackTrace();} finally {// 释放连接 getMethod.releaseConnection();}return page;} }

别忘了导入Maven依赖:

<!-- https://mvnrepository.com/artifact/commons-httpclient/commons-httpclient --><dependency><groupId>commons-httpclient</groupId><artifactId>commons-httpclient</artifactId><version>3.0</version></dependency>

接下来要让我们的程序可以存儲页面,新建Page实体类:

package com.example.spiderman.page;/*** @author YHW* @ClassName: Page* @Description:*/ import com.example.spiderman.utils.CharsetDetector; import org.jsoup.Jsoup; import org.jsoup.nodes.Document;import java.io.UnsupportedEncodingException;/** page* 1: 保存获取到的响应的相关内容;* */ public class Page {private byte[] content ;private String html ; //网页源码字符串private Document doc ;//网页Dom文档private String charset ;//字符编码private String url ;//url路径private String contentType ;// 内容类型public Page(byte[] content , String url , String contentType){this.content = content ;this.url = url ;this.contentType = contentType ;}public String getCharset() {return charset;}public String getUrl(){return url ;}public String getContentType(){ return contentType ;}public byte[] getContent(){ return content ;}/*** 返回网页的源码字符串** @return 网页的源码字符串*/public String getHtml() {if (html != null) {return html;}if (content == null) {return null;}if(charset==null){charset = CharsetDetector.guessEncoding(content); // 根据内容来猜测 字符编码 }try {this.html = new String(content, charset);return html;} catch (UnsupportedEncodingException ex) {ex.printStackTrace();return null;}}/** 得到文档* */public Document getDoc(){if (doc != null) {return doc;}try {this.doc = Jsoup.parse(getHtml(), url);return doc;} catch (Exception ex) {ex.printStackTrace();return null;}}}

然后要有页面的解析功能类:

package com.example.spiderman.page;/*** @author YHW* @ClassName: PageParserTool* @Description:*/ import org.jsoup.nodes.Element; import org.jsoup.select.Elements;import java.util.ArrayList; import java.util.HashSet; import java.util.Iterator; import java.util.Set;public class PageParserTool {/* 通过选择器来选取页面的 */public static Elements select(Page page , String cssSelector) {return page.getDoc().select(cssSelector);}/** 通过css选择器来得到指定元素;** */public static Element select(Page page , String cssSelector, int index) {Elements eles = select(page , cssSelector);int realIndex = index;if (index < 0) {realIndex = eles.size() + index;}return eles.get(realIndex);}/*** 获取满足选择器的元素中的链接 选择器cssSelector必须定位到具体的超链接* 例如我们想抽取id为content的div中的所有超链接,这里* 就要将cssSelector定义为div[id=content] a* 放入set 中 防止重复;* @param cssSelector* @return*/public static Set<String> getLinks(Page page ,String cssSelector) {Set<String> links = new HashSet<String>() ;Elements es = select(page , cssSelector);Iterator iterator = es.iterator();while(iterator.hasNext()) {Element element = (Element) iterator.next();if ( element.hasAttr("href") ) {links.add(element.attr("abs:href"));}else if( element.hasAttr("src") ){links.add(element.attr("abs:src"));}}return links;}/*** 获取网页中满足指定css选择器的所有元素的指定属性的集合* 例如通过getAttrs("img[src]","abs:src")可获取网页中所有图片的链接* @param cssSelector* @param attrName* @return*/public static ArrayList<String> getAttrs(Page page , String cssSelector, String attrName) {ArrayList<String> result = new ArrayList<String>();Elements eles = select(page ,cssSelector);for (Element ele : eles) {if (ele.hasAttr(attrName)) {result.add(ele.attr(attrName));}}return result;} }

别忘了导入Maven依赖:

<!-- https://mvnrepository.com/artifact/org.jsoup/jsoup --><dependency><groupId>org.jsoup</groupId><artifactId>jsoup</artifactId><version>1.11.3</version></dependency>

最后就是额外需要的一些工具类:正则匹配工具,页面编码侦测和存儲页面工具

package com.example.spiderman.utils;/*** @author YHW* @ClassName: RegexRule* @Description:*/ import java.util.ArrayList; import java.util.regex.Pattern; public class RegexRule {public RegexRule(){}public RegexRule(String rule){addRule(rule);}public RegexRule(ArrayList<String> rules){for (String rule : rules) {addRule(rule);}}public boolean isEmpty(){return positive.isEmpty();}private ArrayList<String> positive = new ArrayList<String>();private ArrayList<String> negative = new ArrayList<String>();/*** 添加一个正则规则 正则规则有两种,正正则和反正则* URL符合正则规则需要满足下面条件: 1.至少能匹配一条正正则 2.不能和任何反正则匹配* 正正则示例:+a.*c是一条正正则,正则的内容为a.*c,起始加号表示正正则* 反正则示例:-a.*c时一条反正则,正则的内容为a.*c,起始减号表示反正则* 如果一个规则的起始字符不为加号且不为减号,则该正则为正正则,正则的内容为自身* 例如a.*c是一条正正则,正则的内容为a.*c* @param rule 正则规则* @return 自身*/public RegexRule addRule(String rule) {if (rule.length() == 0) {return this;}char pn = rule.charAt(0);String realrule = rule.substring(1);if (pn == '+') {addPositive(realrule);} else if (pn == '-') {addNegative(realrule);} else {addPositive(rule);}return this;}/*** 添加一个正正则规则* @param positiveregex* @return 自身*/public RegexRule addPositive(String positiveregex) {positive.add(positiveregex);return this;}/*** 添加一个反正则规则* @param negativeregex* @return 自身*/public RegexRule addNegative(String negativeregex) {negative.add(negativeregex);return this;}/*** 判断输入字符串是否符合正则规则* @param str 输入的字符串* @return 输入字符串是否符合正则规则*/public boolean satisfy(String str) {int state = 0;for (String nregex : negative) {if (Pattern.matches(nregex, str)) {return false;}}int count = 0;for (String pregex : positive) {if (Pattern.matches(pregex, str)) {count++;}}if (count == 0) {return false;} else {return true;}} }

package com.example.spiderman.utils;/*** @author YHW* @ClassName: CharsetDetector* @Description:*/import org.mozilla.universalchardet.UniversalDetector;import java.io.UnsupportedEncodingException; import java.util.regex.Matcher; import java.util.regex.Pattern;/*** 字符集自动检测**/ public class CharsetDetector {//从Nutch借鉴的网页编码检测代码private static final int CHUNK_SIZE = 2000;private static Pattern metaPattern = Pattern.compile("<meta\\s+([^>]*http-equiv=(\"|')?content-type(\"|')?[^>]*)>",Pattern.CASE_INSENSITIVE);private static Pattern charsetPattern = Pattern.compile("charset=\\s*([a-z][_\\-0-9a-z]*)", Pattern.CASE_INSENSITIVE);private static Pattern charsetPatternHTML5 = Pattern.compile("<meta\\s+charset\\s*=\\s*[\"']?([a-z][_\\-0-9a-z]*)[^>]*>",Pattern.CASE_INSENSITIVE);//从Nutch借鉴的网页编码检测代码private static String guessEncodingByNutch(byte[] content) {int length = Math.min(content.length, CHUNK_SIZE);String str = "";try {str = new String(content, "ascii");} catch (UnsupportedEncodingException e) {return null;}Matcher metaMatcher = metaPattern.matcher(str);String encoding = null;if (metaMatcher.find()) {Matcher charsetMatcher = charsetPattern.matcher(metaMatcher.group(1));if (charsetMatcher.find()) {encoding = new String(charsetMatcher.group(1));}}if (encoding == null) {metaMatcher = charsetPatternHTML5.matcher(str);if (metaMatcher.find()) {encoding = new String(metaMatcher.group(1));}}if (encoding == null) {if (length >= 3 && content[0] == (byte) 0xEF&& content[1] == (byte) 0xBB && content[2] == (byte) 0xBF) {encoding = "UTF-8";} else if (length >= 2) {if (content[0] == (byte) 0xFF && content[1] == (byte) 0xFE) {encoding = "UTF-16LE";} else if (content[0] == (byte) 0xFE&& content[1] == (byte) 0xFF) {encoding = "UTF-16BE";}}}return encoding;}/*** 根据字节数组,猜测可能的字符集,如果检测失败,返回utf-8** @param bytes 待检测的字节数组* @return 可能的字符集,如果检测失败,返回utf-8*/public static String guessEncodingByMozilla(byte[] bytes) {String DEFAULT_ENCODING = "UTF-8";UniversalDetector detector = new UniversalDetector(null);detector.handleData(bytes, 0, bytes.length);detector.dataEnd();String encoding = detector.getDetectedCharset();detector.reset();if (encoding == null) {encoding = DEFAULT_ENCODING;}return encoding;}/*** 根据字节数组,猜测可能的字符集,如果检测失败,返回utf-8* @param content 待检测的字节数组* @return 可能的字符集,如果检测失败,返回utf-8*/public static String guessEncoding(byte[] content) {String encoding;try {encoding = guessEncodingByNutch(content);} catch (Exception ex) {return guessEncodingByMozilla(content);}if (encoding == null) {encoding = guessEncodingByMozilla(content);return encoding;} else {return encoding;}} }

package com.example.spiderman.utils;/*** @author YHW* @ClassName: FileTool* @Description:*/ import com.example.spiderman.page.Page;import java.io.DataOutputStream; import java.io.File; import java.io.FileOutputStream; import java.io.IOException;/* 本类主要是 下载那些已经访问过的文件*/ public class FileTool {private static String dirPath;/*** getMethod.getResponseHeader("Content-Type").getValue()* 根据 URL 和网页类型生成需要保存的网页的文件名,去除 URL 中的非文件名字符*/private static String getFileNameByUrl(String url, String contentType) {//去除 http:// url = url.substring(7);//text/html 类型if (contentType.indexOf("html") != -1) {url = url.replaceAll("[\\?/:*|<>\"]", "_") + ".html";return url;}//如 application/pdf 类型else {return url.replaceAll("[\\?/:*|<>\"]", "_") + "." +contentType.substring(contentType.lastIndexOf("/") + 1);}}/** 生成目录* */private static void mkdir() {if (dirPath == null) {dirPath = Class.class.getClass().getResource("/").getPath() + "temp\\";}File fileDir = new File(dirPath);if (!fileDir.exists()) {fileDir.mkdir();}}/*** 保存网页字节数组到本地文件,filePath 为要保存的文件的相对地址*/public static void saveToLocal(Page page) {mkdir();String fileName = getFileNameByUrl(page.getUrl(), page.getContentType()) ;String filePath = dirPath + fileName ;byte[] data = page.getContent();try {//Files.lines(Paths.get("D:\\jd.txt"), StandardCharsets.UTF_8).forEach(System.out::println);DataOutputStream out = new DataOutputStream(new FileOutputStream(new File(filePath)));for (int i = 0; i < data.length; i++) {out.write(data[i]);}out.flush();out.close();System.out.println("文件:"+ fileName + "已经被存储在"+ filePath );} catch (IOException e) {e.printStackTrace();}}}

为了拓展性,增加一个LinkFilter接口:

package com.example.spiderman.link;/*** @author YHW* @ClassName: LinkFilter* @Description:*/ public interface LinkFilter {public boolean accept(String url); }

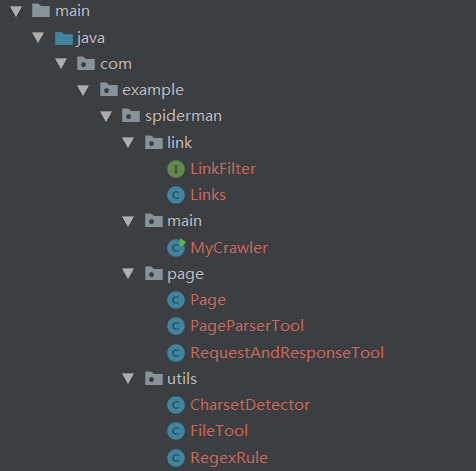

整体项目结构:

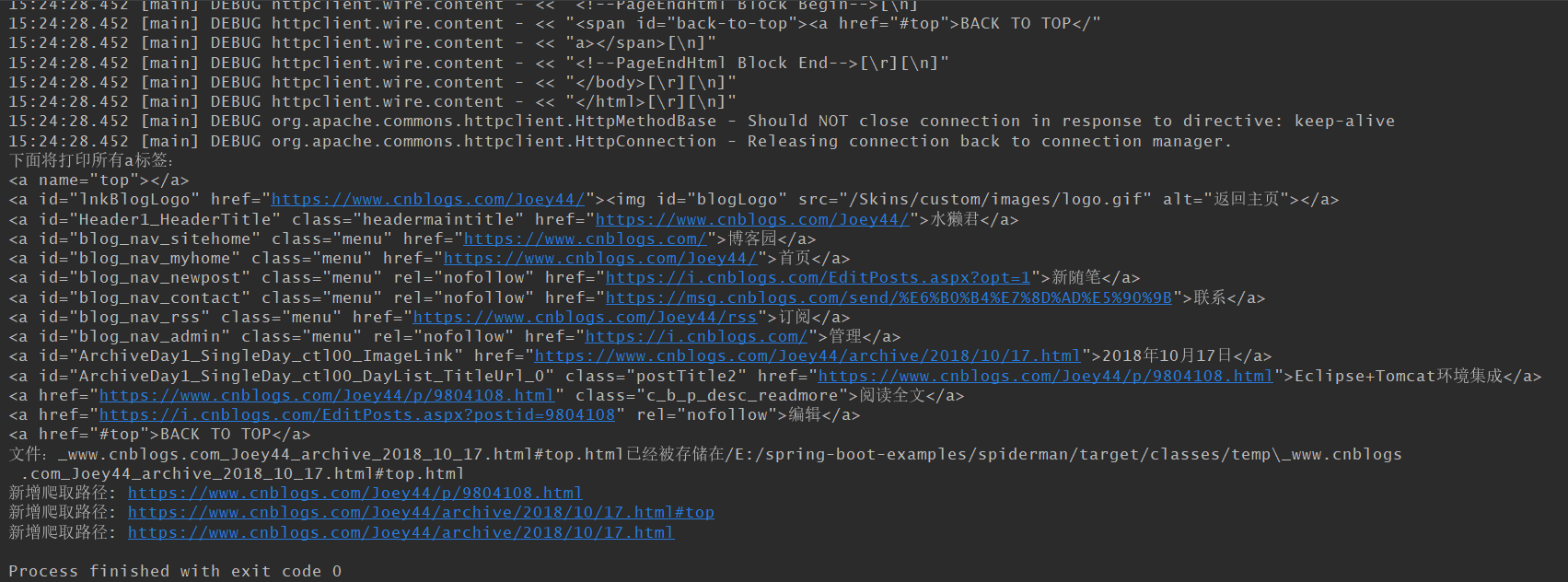

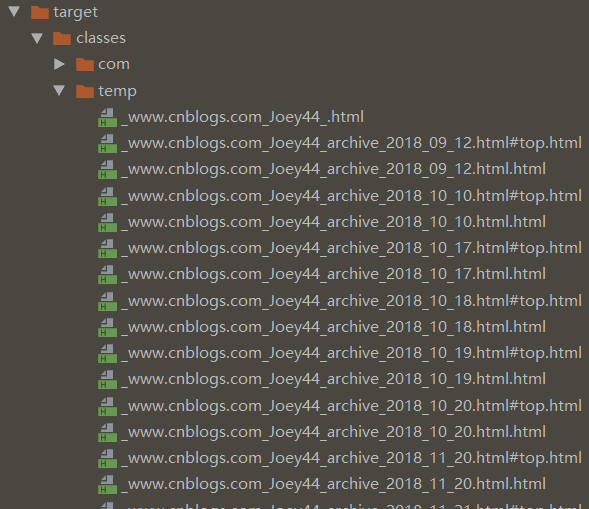

运行结果(部分):

网络爬虫的Helloworld版本就完成了,当然还有许多可以改进的地方,例如多线程访问URL队列;在我们获取网站源码之后,就可以利用解析工具获取该页面任意的元素标签,再进行正则表达式过滤出我们想要的数据了,可以将数据存储到数据库里,还可以根据需要使用分布式爬虫提高爬取速率.

参考文章:

https://www.cnblogs.com/wawlian/archive/2012/06/18/2553061.html

https://www.cnblogs.com/sanmubird/p/7857474.html

转载于:https://www.cnblogs.com/Joey44/p/10320377.html

本文链接:https://my.lmcjl.com/post/13077.html

4 评论